Runtimes

A runtime in Gradient Notebooks is the combination of a workspace (such as a Git repo) and a container. Runtimes are used to create environments that others can reproduce exactly.

Introduction to runtimes in notebooks

In Gradient Notebooks, a runtime is defined by its container and workspace.

A workspace is the set of files managed by the Gradient Notebooks IDE, while a container is the DockerHub or NVIDIA Container Registry image installed by Gradient.

A runtime does not specify a particular machine or instance type. One benefit of Gradient Notebooks is that the same runtime can be run on a wide variety of instance types.

When creating a new notebook, Gradient offers a list of runtimes to choose from, grouped under Recommended and All. We can use one of these runtime tiles or create our own runtime.

How to use a runtime provided by Gradient

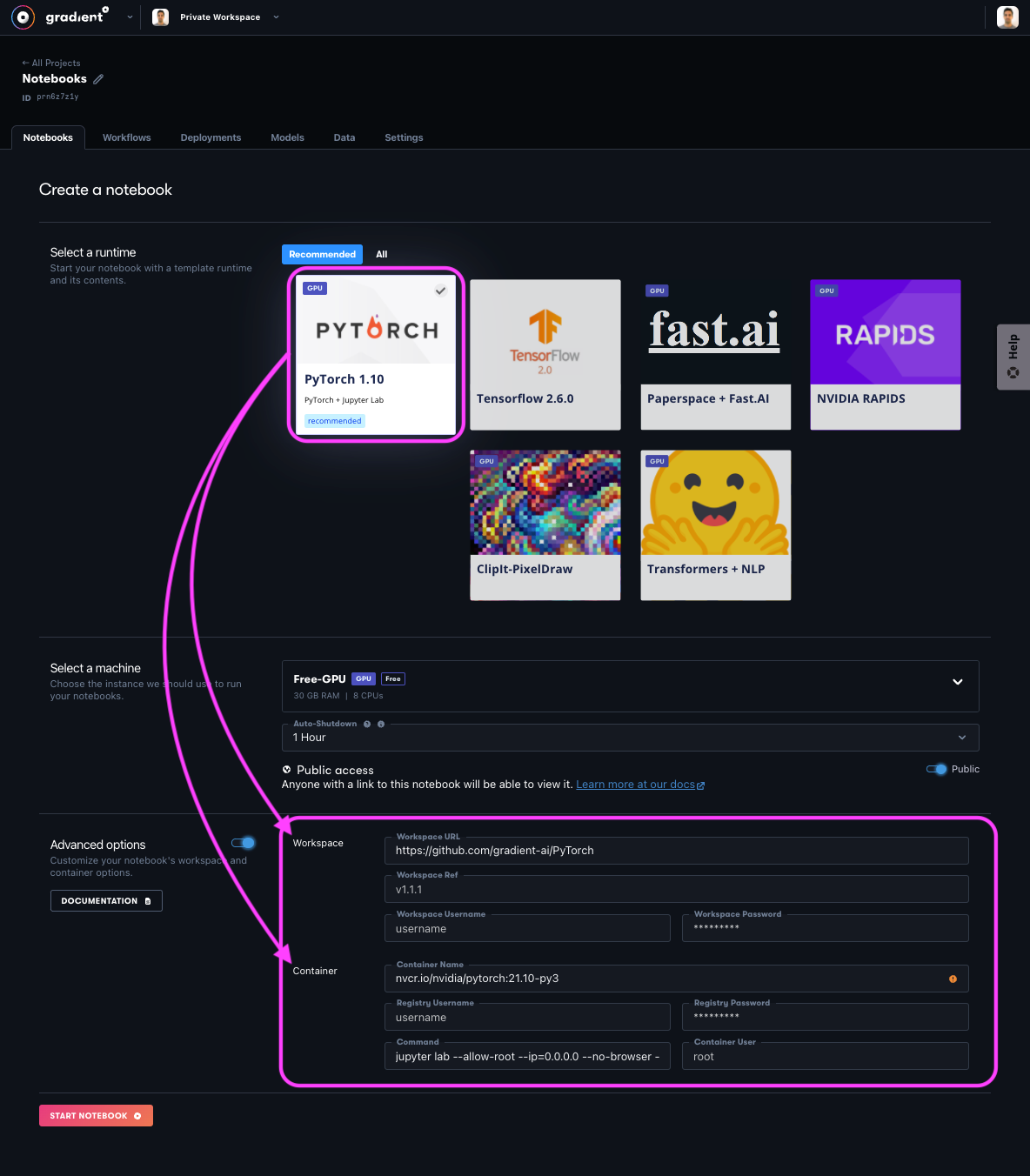

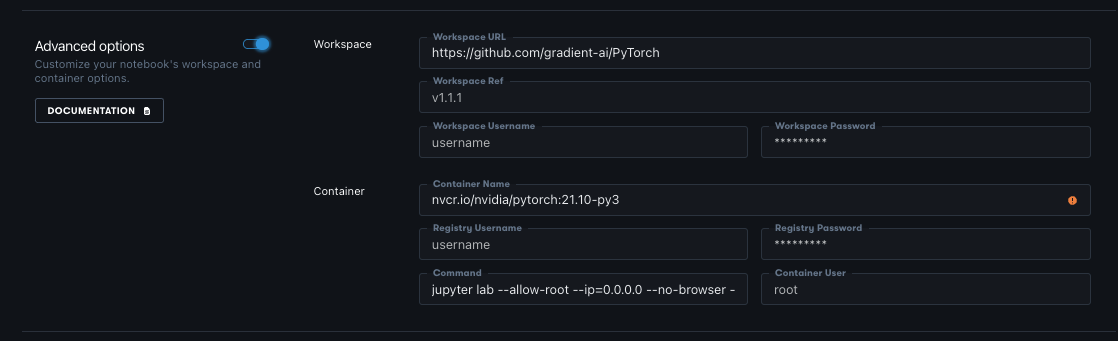

To explore the details of a particular runtime provided by Gradient, expand the Advanced Options section of the Create a notebook flow.

In this example, we will explore the PyTorch 1.10 runtime tile, which consists of the following:

- Workspace URL: https://github.com/gradient-ai/PyTorch
- Container Name: nvcr.io/nvidia/pytorch:21.10-py3

The Workspace URL parameter tells Gradient which files to load into the notebook file manager.

The Container Name parameter tells Gradient where to fetch the container image that will be used to build the notebook.

When we select Start Notebook, Gradient imports the files located at the Workspace URL into the file manager, then pulls and runs the container image specified in the Container Name field.
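To make the two parameters concrete, here is a minimal Python sketch that models a runtime as a workspace URL plus a container name, and splits the container name into registry, repository, and tag using Docker's image-naming conventions: a host such as nvcr.io before the first slash names the registry, otherwise the image comes from DockerHub, and a missing tag means latest. The Runtime and ImageRef classes and the parse_image function are hypothetical illustrations, not part of any Gradient API.

```python
from dataclasses import dataclass
from typing import Optional

DOCKER_HUB = "docker.io"


@dataclass(frozen=True)
class ImageRef:
    registry: str
    repository: str
    tag: str


def parse_image(name: str) -> ImageRef:
    """Split a reference such as 'nvcr.io/nvidia/pytorch:21.10-py3' into
    registry, repository and tag, following Docker's naming conventions."""
    # Drop any '@sha256:...' digest; this sketch only handles tags.
    name = name.split("@", 1)[0]
    first, sep, rest = name.partition("/")
    # The first path component is a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = DOCKER_HUB, name
    # A tag is a ':' inside the last path component (not a registry port).
    head, slash, last = remainder.rpartition("/")
    if ":" in last:
        last, tag = last.split(":", 1)
    else:
        tag = "latest"  # Docker's default when no tag is given
    repository = f"{head}{slash}{last}"
    # Single-name DockerHub images live under the implicit 'library/' namespace.
    if registry == DOCKER_HUB and "/" not in repository:
        repository = f"library/{repository}"
    return ImageRef(registry, repository, tag)


@dataclass(frozen=True)
class Runtime:
    """Hypothetical model of a runtime: a workspace plus a container."""
    workspace_url: Optional[str]  # None for runtimes listed with no workspace
    container_name: str

    @property
    def image(self) -> ImageRef:
        return parse_image(self.container_name)


pytorch_110 = Runtime(
    workspace_url="https://github.com/gradient-ai/PyTorch",
    container_name="nvcr.io/nvidia/pytorch:21.10-py3",
)
print(pytorch_110.image)
```

For the PyTorch 1.10 tile, the image resolves to registry nvcr.io, repository nvidia/pytorch, and tag 21.10-py3. Pinning an explicit tag, rather than relying on the implicit latest, is what lets the same container name keep referring to the same environment.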

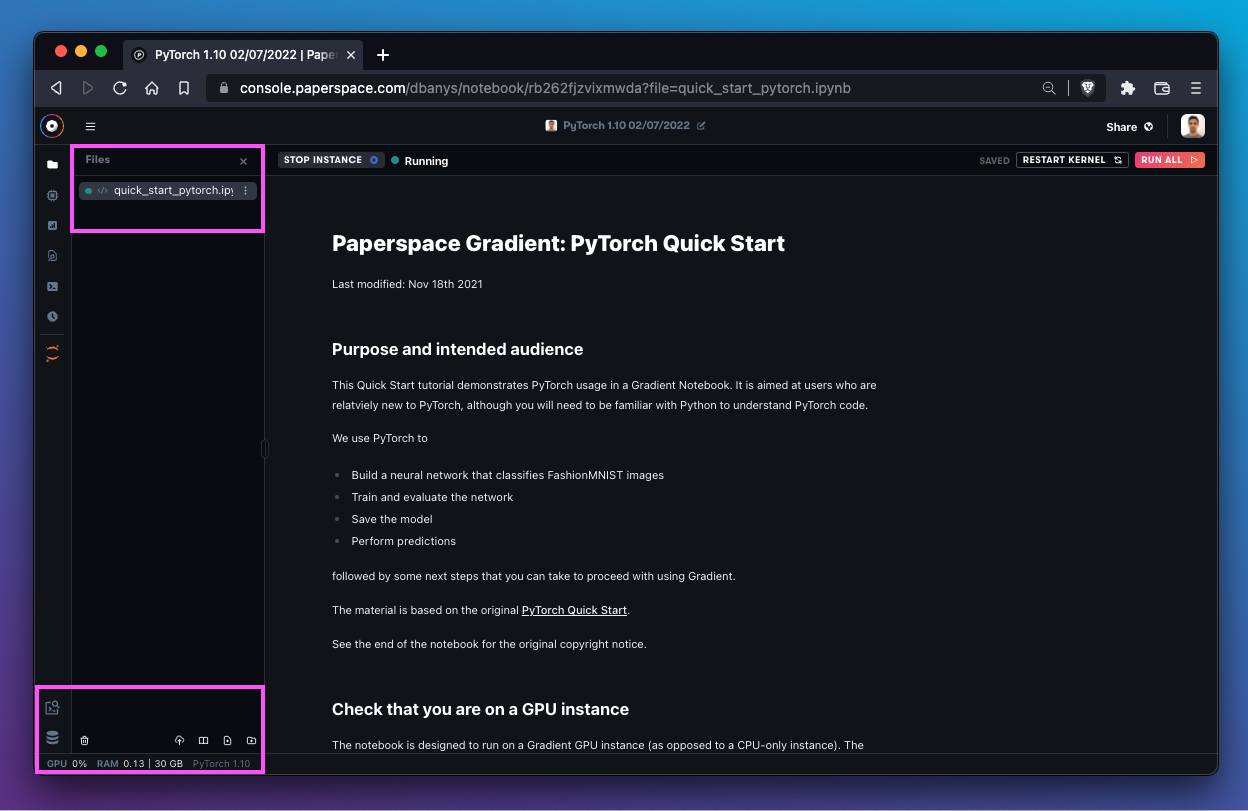

In this example, our new PyTorch 1.10 notebook contains the files from https://github.com/gradient-ai/PyTorch running on the container from nvcr.io/nvidia/pytorch:21.10-py3.

We can confirm this by checking that the file manager is populated with the contents of the GitHub repository (in this case, a single file) and that the bottom bar displays the name of the container image.

List of base runtimes

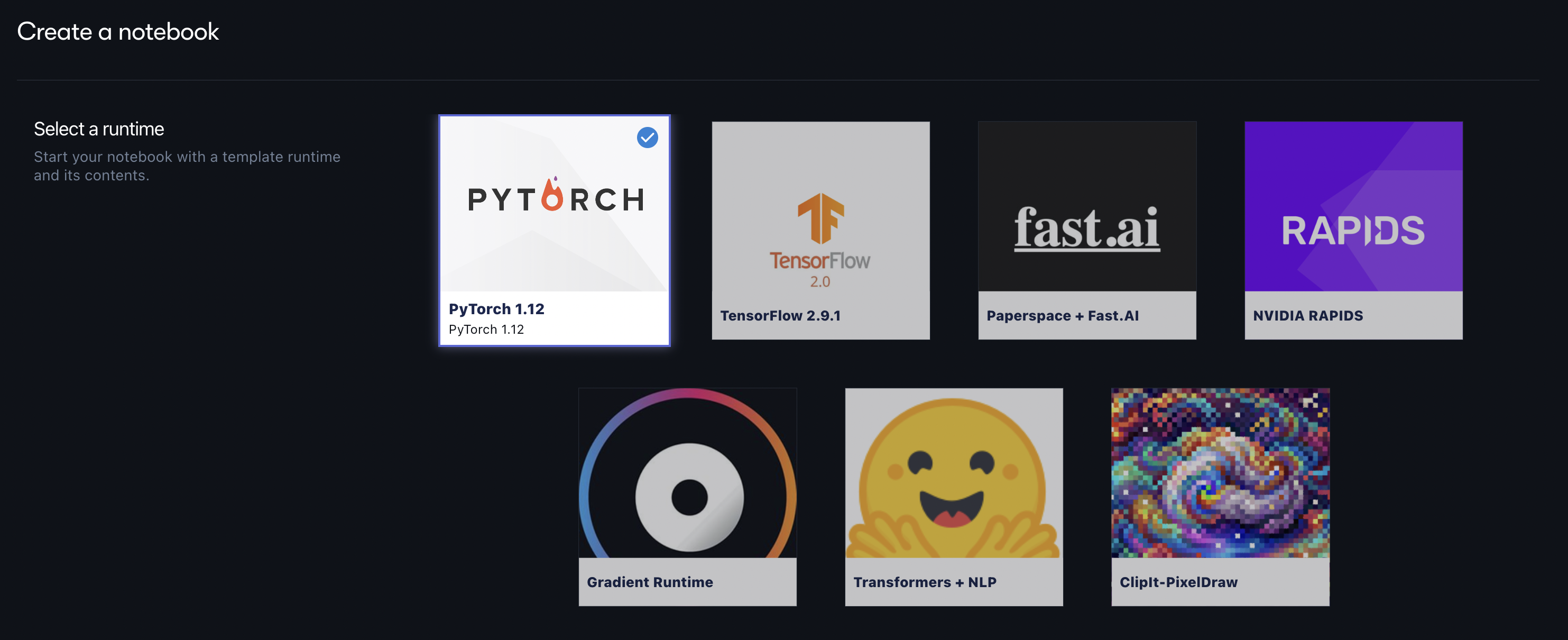

Paperspace maintains a number of runtimes that make it easy to get started with preloaded notebook files and dependencies. These runtimes are provided as tiles when creating a new notebook in Gradient.

Recommended runtimes

The following is a list of recommended runtimes that Paperspace maintains:

| Name | Description | Container Name | Container Registry | Workspace URL |
|---|---|---|---|---|
| PyTorch 1.12 | Latest PyTorch release (1.12) with GPU support. | paperspace/gradient-base:pt112-tf29-jax0314-py39-20220803 | DockerHub | https://github.com/gradient-ai/PyTorch |
| TensorFlow 2.9.1 | TensorFlow 2 with GPU support. | paperspace/gradient-base:pt112-tf29-jax0314-py39-20220803 | DockerHub | https://github.com/gradient-ai/TensorFlow |
| Start from Scratch | Includes machine learning libraries from the PyTorch, TensorFlow, and JAX communities. | paperspace/gradient-base:tf29-pt112-py39-2022-06-29 | DockerHub | N/A |
| Paperspace + Fast.ai | Paperspace's Fast.ai template, built for getting up and running with the enormously popular Fast.ai online MOOC. | paperspace/fastai:2.0-fastbook-2022-06-29 | DockerHub | https://github.com/fastai/fastbook.git |
| NVIDIA RAPIDS | NVIDIA's library for executing end-to-end data science and analytics pipelines on GPUs. | rapidsai/rapidsai:22.06-cuda11.0-runtime-ubuntu18.04-py3.8 | NVIDIA | https://github.com/gradient-ai/RAPIDS.git |
| ClipIt-PixelDraw | A creative library for generating pixel art from simple text prompts. | paperspace/clip-pixeldraw:jupyter | DockerHub | https://github.com/gradient-ai/ClipIt-PixelDraw |
| Hugging Face Transformers + NLP | A state-of-the-art NLP library from Hugging Face. | paperspace/gradient-base:pt112-tf29-jax0314-py39-20220803 | DockerHub | https://github.com/huggingface/transformers.git |
| DALL-E Mini | An impressive image generation model that runs on JAX. | paperspace/gradient-base:pt112-tf29-jax0314-py39-20220803 | DockerHub | https://github.com/huggingface/transformers.git |
| Hugging Face Optimum on IPU | Run through various Hugging Face tutorials optimized for Graphcore IPUs. | graphcore/pytorch-jupyter:2.6.0-ubuntu-20.04-20220804 | DockerHub | https://github.com/gradient-ai/Graphcore-HuggingFace |
| PyTorch on IPU | Run through various PyTorch tutorials optimized for Graphcore IPUs. | graphcore/pytorch-jupyter:2.6.0-ubuntu-20.04-20220804 | DockerHub | https://github.com/gradient-ai/Graphcore-Pytorch |
| TensorFlow 2 on IPU | Run through several TensorFlow 2 tutorials optimized for Graphcore IPUs. | graphcore/tensorflow-jupyter:2-amd-2.6.0-ubuntu-20.04-20220804 | DockerHub | https://github.com/gradient-ai/Graphcore-Tensorflow2 |

Previous runtime versions

The following is a sampling of previous runtime versions, which you can run by copying the desired runtime's Container Name and Workspace URL values into the Advanced Options menu when creating a notebook. Runtimes are added and updated frequently.

| Name | Description | Container Name | Container Registry | Workspace URL |
|---|---|---|---|---|
| Python 3.9 | Python 3.9 with GPU support. | paperspace/python-runtime:py39-2022-07-06 | DockerHub | N/A |
| PyTorch 1.11 | PyTorch 1.11 with GPU support. | paperspace/nb-pytorch:22.02-py3 | DockerHub | https://github.com/gradient-ai/PyTorch |
| TensorFlow 2.7.0 | TensorFlow 2 with GPU support. | paperspace/nb-tensorflow:22.02-tf2-py3 | DockerHub | https://github.com/gradient-ai/TensorFlow |
| Paperspace + Fast.ai | Paperspace's Fast.ai template, built for getting up and running with the enormously popular Fast.ai online MOOC. | paperspace/fastai:2.0-fastbook-2022-05-09-rc3 | GitHub | https://github.com/fastai/fastbook.git |
| Hugging Face Transformers | A state-of-the-art NLP library from Hugging Face. | paperspace/nb-transformers:4.17.0 | DockerHub | https://github.com/huggingface/transformers.git |
| Analytics Vidhya CV | Analytics Vidhya container. | jalfaizy/cv_docker:latest | GitHub | N/A |
| TensorFlow (1.14 GPU) | Official Docker images for TensorFlow version 1. | paperspace/dl-containers:tensorflow1140-py36-cu100-cdnn7-jupyter | DockerHub | N/A |
| JupyterLab Data Science Stack | Jupyter Notebook Data Science Stack. | jupyter/datascience-notebook | DockerHub | N/A |
| JupyterLab Data R Stack | Jupyter Notebook R Stack. | jupyter/r-notebook | DockerHub | https://github.com/gradient-ai/R.git |

How to specify a custom workspace

Gradient provides the ability to import a workspace during notebook creation.

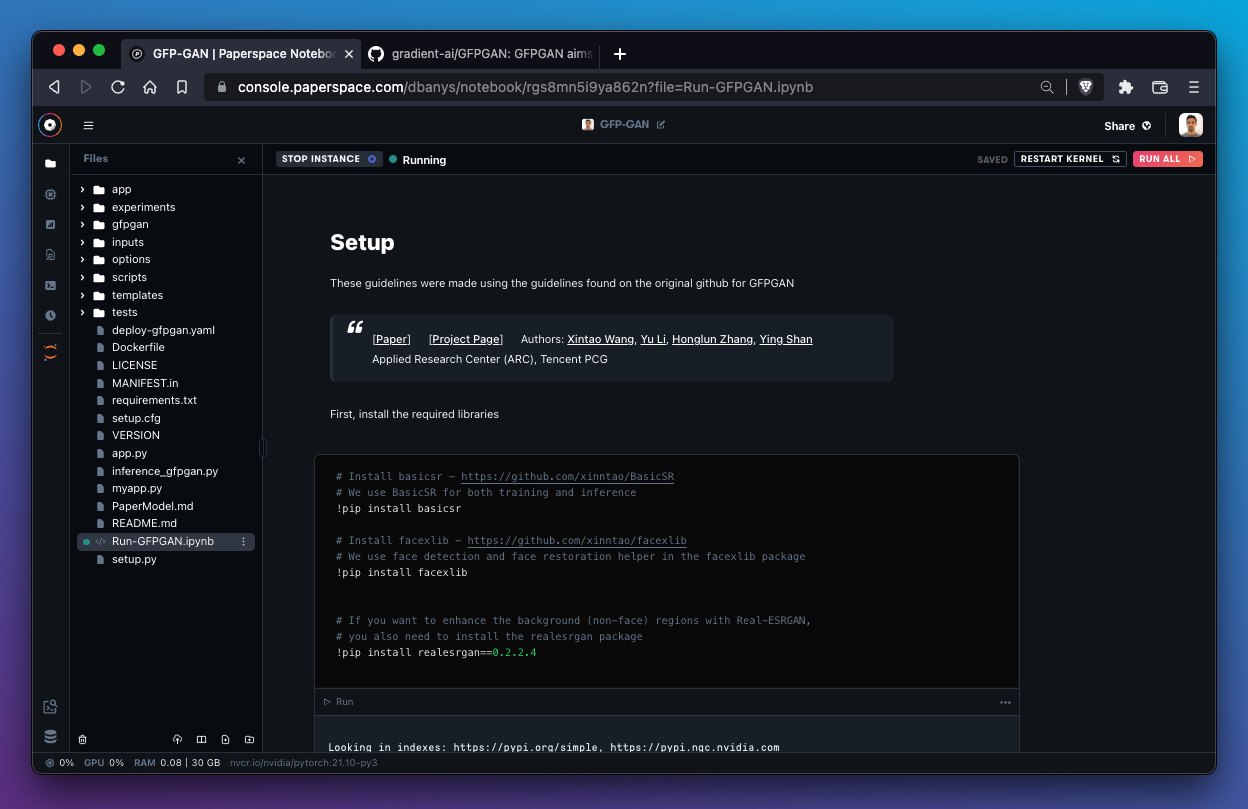

Let's say we're interested in working with GFP-GAN, an exciting library that helps us restore and upscale images. Rather than cloning the files from GitHub after we create the notebook, we can create the notebook with the files already in place.

All we need to do is specify the GFP-GAN GitHub repo in the Workspace URL parameter of Advanced options during notebook creation.

Gradient automatically copies all of the files from the GitHub repo into the notebook IDE.
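For comparison, the manual alternative is to clone the repository yourself once the notebook is running. The small Python sketch below builds the git clone command for a workspace URL and computes the directory that git clone creates by default, using a simplified version of git's rule (the last path segment of the URL with any trailing slash or .git suffix removed). The clone_command and clone_directory helpers are hypothetical and do not run git themselves.

```python
from typing import List
from urllib.parse import urlparse


def clone_command(workspace_url: str) -> List[str]:
    """Build the argv for the 'git clone' a user would otherwise run by hand."""
    return ["git", "clone", workspace_url]


def clone_directory(workspace_url: str) -> str:
    """Approximate the directory name 'git clone' creates by default:
    the last path segment, without a trailing '/' or '.git' suffix."""
    path = urlparse(workspace_url).path.rstrip("/")
    name = path.rsplit("/", 1)[-1]
    if name.endswith(".git"):
        name = name[: -len(".git")]
    return name


url = "https://github.com/fastai/fastbook.git"
print(" ".join(clone_command(url)), "->", clone_directory(url))
```

Specifying the repository in the Workspace URL field skips this step entirely, since the files are already in the file manager when the notebook starts.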

The resulting notebook looks like this:

A custom workspace can only be specified when the notebook is created.

It's also possible to pull files from private or password-protected repositories by filling in the Workspace Username and Workspace Password fields.

The same approach applies to containers, as the next section demonstrates.

How to specify a custom container

A container is a disk image pre-loaded with files and dependencies. Gradient provides the ability to import an image from a container registry such as DockerHub during notebook creation.

In this example, we'll tell Gradient to pull the latest container from NVIDIA RAPIDS by specifying the Docker image in the Container Name field.

Note that the larger the container, the longer it will take for Gradient to pull it into a notebook.

How to create a custom Docker container to use with Gradient Notebooks

Paperspace recommends using Docker to build the container image on your local machine and push it to a container registry such as DockerHub, from which Gradient can pull it.

Jupyter must run on port 8888 and listen on IP address 0.0.0.0 (all interfaces) so that Gradient can connect to it.

If the container runs jupyter notebook, the following flags must be included in the Command field to support the Gradient Notebooks IDE:

--no-browser --NotebookApp.trust_xheaders=True --NotebookApp.disable_check_xsrf=False --NotebookApp.allow_remote_access=True --NotebookApp.allow_origin='*'

If the container runs jupyter lab, use these flags in the Command field instead:

--no-browser --LabApp.trust_xheaders=True --LabApp.disable_check_xsrf=False --LabApp.allow_remote_access=True --LabApp.allow_origin='*'
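The flag lists above differ only in their NotebookApp or LabApp prefix, so they can be assembled programmatically. The following Python sketch uses a hypothetical jupyter_command helper to build a Command-field value for either app. For jupyter notebook it reproduces the documented default Command exactly; for jupyter lab it swaps in the LabApp prefix and, as an assumption by analogy with the notebook default, keeps --allow-root and --ip=0.0.0.0. No --port flag is added because 8888 is already Jupyter's default port.

```python
def jupyter_command(app: str = "notebook") -> str:
    """Assemble a Command-field value for 'jupyter notebook' or 'jupyter lab'.

    Hypothetical helper: the flags mirror the documented default command,
    with the config-class prefix switched to LabApp for JupyterLab.
    """
    prefixes = {"notebook": "NotebookApp", "lab": "LabApp"}
    if app not in prefixes:
        raise ValueError(f"unsupported app: {app!r}")
    p = prefixes[app]
    flags = [
        "--allow-root",    # the container user defaults to root
        "--ip=0.0.0.0",    # listen on all interfaces, not just localhost
        "--no-browser",    # don't try to open a browser inside the container
        f"--{p}.trust_xheaders=True",
        f"--{p}.disable_check_xsrf=False",
        f"--{p}.allow_remote_access=True",
        f"--{p}.allow_origin='*'",  # quoted exactly as in the documented default
    ]
    return " ".join(["jupyter", app, *flags])


print(jupyter_command("lab"))
```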

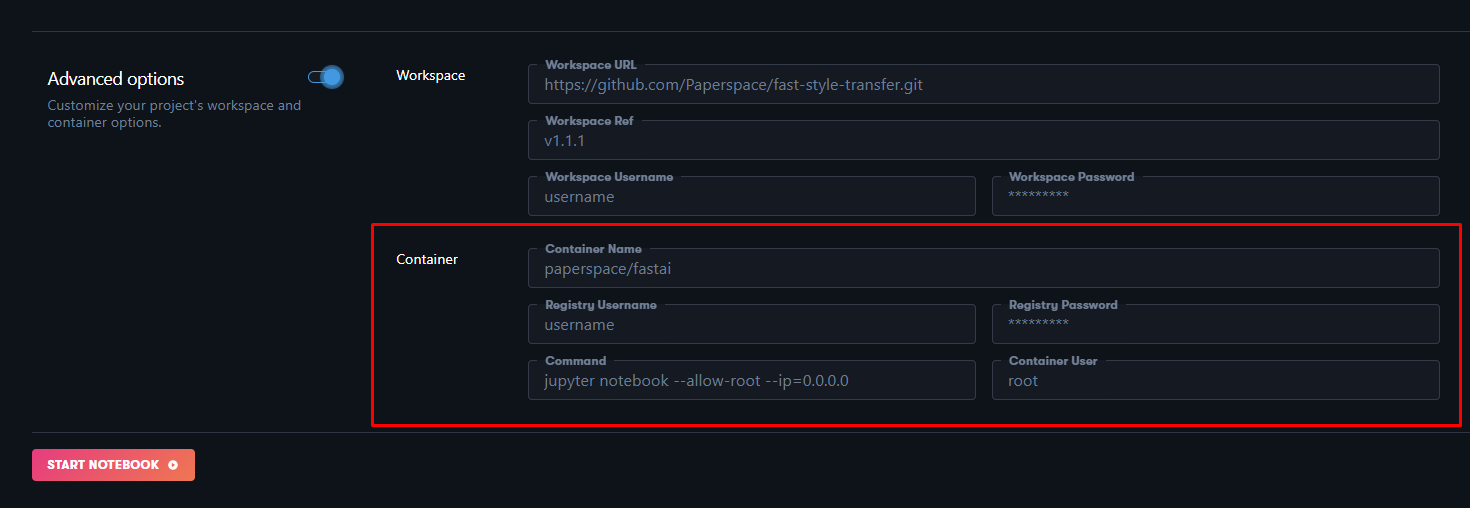

Custom container reference

The following fields are available in the Container section of Advanced options when creating a new Gradient Notebook:

| Field | Required | Description |
|---|---|---|
| Container Name | true | Path and tag of the image on DockerHub or NVIDIA Container Registry, for example ufoym/deepo:all-jupyter-py36. |
| Registry Username | false | Username for a private container registry. Can be left blank for public images. |
| Registry Password | false | Password for a private container registry. Can be left blank for public images. Secrets may be used in this field using the substitution syntax secret: |
| Command | false | Must be Jupyter compatible. If left blank, defaults to jupyter notebook --allow-root --ip=0.0.0.0 --no-browser --NotebookApp.trust_xheaders=True --NotebookApp.disable_check_xsrf=False --NotebookApp.allow_remote_access=True --NotebookApp.allow_origin='*' |
| Container User | false | Optional user. Defaults to 'root' if left blank. |
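The field table above can be mirrored in a small Python sketch that records which field is required and which fallbacks apply when the optional ones are left blank. ContainerSettings is a hypothetical class for illustration, not a Gradient SDK type; the default command and the root user are taken directly from the table.

```python
from dataclasses import dataclass
from typing import Optional

# Documented fallback for a blank Command field.
DEFAULT_COMMAND = (
    "jupyter notebook --allow-root --ip=0.0.0.0 --no-browser "
    "--NotebookApp.trust_xheaders=True --NotebookApp.disable_check_xsrf=False "
    "--NotebookApp.allow_remote_access=True --NotebookApp.allow_origin='*'"
)


@dataclass
class ContainerSettings:
    """Hypothetical mirror of the Container fields in Advanced options."""
    container_name: str                      # required
    registry_username: Optional[str] = None  # blank for public images
    registry_password: Optional[str] = None  # blank for public images
    command: Optional[str] = None            # blank -> DEFAULT_COMMAND
    container_user: Optional[str] = None     # blank -> 'root'

    def __post_init__(self) -> None:
        # Container Name is the only required field.
        if not self.container_name.strip():
            raise ValueError("Container Name is required")

    def effective_command(self) -> str:
        # An empty string or None both count as "left blank".
        return self.command or DEFAULT_COMMAND

    def effective_user(self) -> str:
        return self.container_user or "root"


settings = ContainerSettings("ufoym/deepo:all-jupyter-py36")
print(settings.effective_user(), "|", settings.effective_command())
```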